In my two most recent posts, I listed the memoirs and biographies and followed this up with the non-fiction I enjoyed the most in 2021. I'll leave my roundup of 'classic' fiction until tomorrow, but today I'll be going over my favourite fiction.

Books that just miss the cut here include Kingsley Amis' comic Lucky Jim, Cormac McCarthy's The Road (although see below for McCarthy's Blood Meridian) and the Complete Adventures of Tintin by Hergé, the latter forming an inadvertently incisive portrait of the first half of the 20th century.

As ever, there were a handful of books that didn't live up to prior expectations. Despite all of the hype, Emily St. John Mandel's post-pandemic dystopia Station Eleven didn't match her superb The Glass Hotel (one of my favourite books of 2020). The same could be said of John le Carré's The Spy Who Came in from the Cold, which felt significantly shallower compared to Tinker, Tailor, Soldier, Spy (again, a favourite of last year). The strangest book (and most difficult to classify at all) was undoubtedly Patrick Süskind's Perfume: The Story of a Murderer, and the fiction book I disliked the most was almost-certainly Beartown by Fredrik Backman.

Two other mild disappointments were actually the sources of film adaptations. Specifically, the original source for Vertigo by Pierre Boileau and Thomas Narcejac didn't match Alfred Hitchcock's 1958 masterpiece, and neither did James Sallis' Drive, which was made into a superb 2011 neon-noir directed by Nicolas Winding Refn. These two films thus defy the usual trend and are 'better than the book', but that's a post for another day.

A Wizard of Earthsea (1968)

Ursula K. Le Guin

How did it come to be that Harry Potter is the publishing sensation of the century, yet Ursula K. Le Guin's Earthsea is only a popular cult novel? Indeed, the comparisons and unintentional intertextuality with Harry Potter are entirely unavoidable when reading this book, and, in almost every respect, Ursula K. Le Guin's universe comes out the victor.

In particular, the wizarding world that Le Guin portrays feels a lot more generous and humble than the class-ridden world of Hogwarts School of Witchcraft and Wizardry. Just to take one example from many, in Earthsea, magic turns out to be nurtured in a bottom-up manner within small village communities, in almost complete contrast to J. K. Rowling's concept of benevolent government departments and NGO-like institutions, which now seems far too New Labour for me. Indeed, imagine an entire world imbued with the kindly benevolence of Dumbledore, and you've got some of the moral palette of Earthsea.

The gently moralising tone that runs through A Wizard of Earthsea may put some people off:

Vetch had been three years at the School and soon would be made Sorcerer; he thought no more of performing the lesser arts of magic than a bird thinks of flying. Yet a greater, unlearned skill he possessed, which was the art of kindness.

Still, these parables aimed directly at the reader are fairly rare, and, for me, remain on the right side of being mawkish or hectoring. I'm thus looking forward to reading the next two books in the series soon.

Blood Meridian (1985)

Cormac McCarthy

Blood Meridian follows a band of American bounty hunters who are roaming the Mexican-American borderlands in the late 1840s. Far from being remotely swashbuckling, though, the group are collecting scalps for money and killing anyone who crosses their path. It is the most unsparing treatment of American genocide and moral depravity I have ever come across, an anti-Western that flouts every convention of the genre. Blood Meridian thus has a family resemblance to that other great anti-Western, Once Upon a Time in the West: after making a number of gun-toting films that venerate the American West (i.e. his Dollars Trilogy), Sergio Leone turned his cynical eye to the western.

Yet my previous paragraph actually euphemises just how violent Blood Meridian is. Indeed, I would need to be a much better writer (perhaps McCarthy himself) to adequately outline the tone of this book. In a certain sense, it's less that you read this book in a conventional sense, but rather that you are forced to witness successive chapters of grotesque violence... all occurring for no obvious reason. It is often said that books 'subvert' a genre and, indeed, I implied as much above. But the term subvert implies a kind of Puck-like mischievousness, or brings to mind court jesters licensed to poke fun at the courtiers. By contrast, however, Blood Meridian isn't funny in the slightest. There isn't animal cruelty per se, but rather wanton negligence of another kind entirely. In fact, recalling a particular passage involving an injured horse makes me feel physically ill.

McCarthy's prose is at once both baroque in its language and thrifty in its presentation. As Philip Connors wrote back in 2007, McCarthy has spent forty years writing as if he were trying to expand the Old Testament, and learning that McCarthy grew up around the Church therefore came as no real surprise. As an example of his textual frugality, I often looked for greater precision in the text, finding myself asking who a particular 'he' is, or to which side of a fight some two men belonged. Yet we must always remember that there is no precision to be found in a gunfight, so this infidelity is turned into a virtue. It's not that these are fair fights anyway, or even 'murder': Blood Meridian is just slaughter; pure butchery. Murder is a gross understatement for what this book is, and at many points we are grateful that McCarthy spares us precision.

At others, however, we can be thankful for his exactitude. There is no ambiguity regarding the morality of the puppy-drowning Judge, for example: a Colonel Kurtz who has been given free license over the entire American south. There is, thank God, no danger of Hollywood mythologising him into a badass hero. Indeed, we must all be thankful that it is impossible to film this ultra-violent book... In fact, the broader idea of 'adapting' anything to this world is beyond sick.

An absolutely brutal read; I cannot recommend it highly enough.

Bodies of Light (2014)

Sarah Moss

Bodies of Light is a 2014 book by Glasgow-born Sarah Moss on the stirrings of women's suffrage within an arty clique in nineteenth-century England. Set in the intellectually smoggy cities of Manchester and London, this poignant book follows the studiously intelligent Alethia 'Ally' Moberly who is struggling to gain the acceptance of herself, her mother and the General Medical Council. You can read my full review from July.

House of Leaves (2000)

Mark Z. Danielewski

House of Leaves is a remarkably difficult book to explain. Although the plot refers to a fictional documentary about a family whose house is somehow larger on the inside than the outside, this quotidian horror premise doesn't explain the complex meta-commentary that Danielewski adds on top. For instance, the book contains a large number of pseudo-academic footnotes (many of which contain footnotes themselves), with references to scholarly papers, books, films and other articles. Most of these references are obviously fictional, but it's the kind of book where the joke is that some of them are not. The format, structure and typography of the book are highly unconventional too, with extremely unusual page layouts and styles.

It's the sort of book and idea that should be a tired gimmick but somehow isn't. This is particularly so when you realise it seems specifically designed to create a fandom around it and to manufacture its own 'cult' status, something that should be extremely tedious. But not only does this not happen, House of Leaves seems to have survived through two exhausting decades of found footage: The Blair Witch Project and Paranormal Activity are, to an admittedly lesser degree, doing much of the same thing as House of Leaves.

House of Leaves might have its origins in Nabokov's Pale Fire or even Derrida's Glas, but it seems to have more in common with the claustrophobic horror of Cube (1997). And like all of these works, House of Leaves has an extremely strange effect on the reader or viewer, something quite unlike reading a conventional book. It wasn't so much what I got out of the book itself, but how it added a glow to everything else I read, watched or saw at the time. An experience.

Milkman (2018)

Anna Burns

This quietly dazzling novel from Irish author Anna Burns is full of intellectual whimsy and oddball incident. Incongruously set in 1970s Belfast during the Irish Troubles, Milkman's 18-year-old narrator (known only as 'middle sister') is the kind of dreamer who walks down the street with a Victorian-era novel in her hand.

It's usually an error for a book to specifically mention other books, if only because inviting comparisons to great novels is grossly ill-advised. But it is a credit to Burns' writing that the references here actually add to the text and don't feel like they are a kind of literary paint by numbers. Our humble narrator has a boyfriend of sorts, but the figure who looms the largest in her life is a creepy milkman: an older, married man who's deeply integrated in the paramilitary tribalism. And when gossip about the narrator and the milkman surfaces, the milkman begins to invade her life to a suffocating degree.

Yet this milkman is not even a milkman at all. Indeed, it's precisely this kind of oblique irony that runs through this daring but darkly compelling book.

The First Fifteen Lives of Harry August (2014)

Claire North

Harry August is born, lives a relatively unremarkable life and finally dies a relatively unremarkable death. Not worth writing a novel about, I suppose. But then Harry finds himself born again in the very same circumstances, and as he grows from infancy into childhood again, he starts to remember his previous lives. This loop naturally drives Harry insane at first, but after finding that suicide doesn't stop the quasi-reincarnation, he becomes somewhat acclimatised to his fate. He prospers much better at school the next time around and is ultimately able to make better decisions about his life, especially when he just happens to know how to stay out of trouble during the Second World War.

Yet what caught my attention in this 'soft' sci-fi book was not necessarily the book's core idea but rather the way its connotations were so intelligently thought through. Just like in a musical theme and variations, the success of any concept-driven book is far more a product of how the implications of the key idea are played out than how clever the central idea was to begin with. Otherwise, you just have another neat Borges short story: satisfying, to be sure, but in a narrower way.

From her relatively simple premise, for example, North has divined that if there was a community of people who could remember their past lives, this would actually allow messages and knowledge to be passed backwards and forwards in time. Ah, of course! Indeed, this very mechanism drives the plot: news comes back from the future that the progress of history is being interfered with, and, because of this, the end of the world is slowly coming. Through the lives that follow, Harry sets out to find out who is passing on technology before its time, and work out how to stop them.

With its gently-moralising romp through the salient historical touchpoints of the twentieth century, I sometimes got a whiff of Forrest Gump. But it must be stressed that this book is far less certain of its 'right-on' liberal credentials than Robert Zemeckis' badly-aged film. And whilst we're on the topic of other media, if you liked the underlying conceit behind Stuart Turton's The Seven Deaths of Evelyn Hardcastle yet didn't enjoy the 'variations' of that particular tale, then I'd definitely give The First Fifteen Lives a try. At the very least, 15 is bigger than 7.

More seriously, though, The First Fifteen Lives appears to reflect anxieties about technology, particularly around modern technological accelerationism. At no point does it seriously suggest that if we could somehow possess the technology from a decade in the future then our lives would be improved in any meaningful way. Indeed, precisely the opposite is invariably implied. To me, at least, homo sapiens often seems to be merely marking time until we can blow each other up, and destroying the climate whilst sleepwalking into some crisis that might precipitate a thermonuclear genocide sometimes seems to be built into our DNA. In an era of cli-fi and our non-fiction newspaper headlines, to label North's insight as 'prescience' might perhaps be overstating it, but perhaps that is the point: this destructive and negative streak is universal to all periods of our violent, insecure species.

The Goldfinch (2013)

Donna Tartt

After Breaking Bad, the second biggest runaway success of 2014 was probably Donna Tartt's doorstop of a novel, The Goldfinch. Yet upon its release and popular reception, it got a significant number of bad reviews in the literary press with, of course, an equal number of predictable think pieces claiming this was sour grapes on the part of the cognoscenti. Ah, to be in 2014 again, when our arguments were so much more trivial.

For the uninitiated, The Goldfinch is a sprawling bildungsroman that centres on Theo Decker, a 13-year-old whose world is turned upside down when a terrorist bomb goes off whilst he is visiting the Metropolitan Museum of Art, killing his mother among other bystanders. Perhaps more importantly, he makes off with a painting in order to fulfil a promise to a dying old man: Carel Fabritius' 1654 masterpiece The Goldfinch. For the next 14 years (and almost 800 pages), the painting becomes the only connection to his lost mother as he's flung, almost entirely rudderless, around the Western world, encountering an array of eccentric characters.

Whatever the critics claimed, Tartt's near-perfect evocation of scenes, from the everyday to the unimaginable, is difficult to summarise. I wouldn't label it 'cinematic' due to her evocation of the interiority of the characters. Take, for example: "Even the suggestion that my father had close friends conveyed a misunderstanding of his personality that I didn't know how to respond." It's precisely this kind of relatable inner subjectivity that cannot be easily conveyed by film, and is likely one of the main reasons why the 2019 film adaptation was such a damp squib. Tartt's writing is definitely not 'impressionistic' either: there are many near-perfect evocations of scenes, even ones we hope we cannot recognise from real life. In particular, some of the drug-taking scenes feel so credibly authentic that I sometimes worried about the author herself.

Almost eight months on from first reading this novel, what I remember most was what a joy this was to read. I do worry that it won't stand up to a more critical re-reading (the character named Xandra even sounds like the pharmaceuticals she is taking), but I think I'll always treasure the first days I spent with this often-beautiful novel.

Beyond Black (2005)

Hilary Mantel

Published about five years before the hyperfamous Wolf Hall (2009), Hilary Mantel's Beyond Black is a deeply disturbing book about spiritualism and the nature of Hell, somewhat incongruously set in modern-day England. Alison Harte is a middle-aged psychic medium who works in the various towns of the London orbital motorway. She is accompanied by her stuffy assistant, Colette, and her spirit guide, Morris, who is invisible to everyone but Alison.

However, this is no gentle and musk-smelling world of the clairvoyant and mystic, for Alison is plagued by spirits from her past who infiltrate her physical world, becoming stronger and nastier every day. Alison's smiling and rotund persona thus conceals a truly desperate woman: she knows beyond doubt the terrors of the next life, yet must studiously conceal them from her credulous clients.

Beyond Black would be worth reading for its dark atmosphere alone, but it offers much more than a chilling and creepy tale. Indeed, it is extraordinarily observant as well as unsettlingly funny about a particular tranche of British middle-class life. Still, it is the book's unnerving nature that sticks in the mind, and reading it noticeably changed my mood for days afterwards, and not necessarily for the best.

The Wall (2019)

John Lanchester

The Wall tells the story of a young man called Kavanagh, one of the thousands of Defenders standing guard around a solid fortress that envelops the British Isles. A national service of sorts, it is Kavanagh's job to stop the so-called Others getting in. Lanchester is frank about what his wall provides to those who stand guard: the Defenders of the Wall are conscripted for two years on the Wall, with no exceptions, giving everyone in society a life plan and a story.

But whilst The Wall is ostensibly about a physical wall, it works even better as a story about the walls in our mind. In fact, the book blends together some of the most important issues of our time: climate change, increasing isolation, Brexit and other widening societal divisions. If you liked P. D. James' The Children of Men you'll undoubtedly recognise much of the same intellectual atmosphere, although the sterility of John Lanchester's dystopia is definitely figurative and textual rather than literal.

Despite the final chapters perhaps not living up to the world-building of the opening, The Wall features a taut and engrossing narrative, and it undoubtedly warrants at least a cursory glance at its symbolism. I've yet to read something by Lanchester I haven't enjoyed (even his short essay on cheating in sports, for example) and will definitely be reading more from him in 2022.

The Only Story (2018)

Julian Barnes

The Only Story is the story of Paul, a 19-year-old boy who falls in love with 42-year-old Susan, a married woman with two daughters who are about Paul's age. The book begins with how Paul meets Susan in happy (albeit complicated) circumstances, but as the story unfolds, the novel becomes significantly more tragic and moving.

Whilst the story begins from the first-person perspective, midway through the book it shifts into the second person, and, later, into the third as well. Both of these narrative changes suggested to me an attempt on the part of Paul the narrator (if not Barnes himself) to distance himself emotionally from the events taking place. This effect is a lot more subtle than it sounds, however: far more prominent and devastating is the underlying and deeply moving story about how the relationship ends up. Throughout this touching book, Barnes uses his mastery of language and observation to avoid the saccharine and the maudlin, and ends up with a heart-wrenching and emotive narrative. Without a doubt, this is the saddest book I read this year.

It's mostly a robotic existence atm. I try to distract myself via movies, web series, the web, books, etc., whatever can take my mind off it. From the day she died to date, I have a lower back pain which acts as a reminder. It's been a month; I eat, drink, and am surviving but still feel empty. I do things suggested by extended family but within there is no feeling, just emptiness :(. I have no clue if things will get better and even if I do want the change. I clearly have no idea, so let me share a little about what I know.


To my mind, the phone is a middling yet solid phone. I had the phone drop accidentally at times but not a single scratch or anything like that. One can look at the specs in greater detail on fccd.io. Before the recent price drop, as I shared, it was a mid-range phone so am gonna review it on that basis itself. One of the first things I did was to buy a plastic cover as well as a cover shield even though the original one is meant to work for a year or more. This was simply for added protection and it has served me to date. Even with the additional weight, I can easily use it with one hand. It only becomes problematic when using chatting apps such as Whatsapp, Telegram, Quicksy and a few others where it comes with the Samsung keyboard with the divided/split keyboard. The A.I. for guessing words and sentences is spot-on when you are doing it in English but if you try a mixture of Hinglish (Hindi and English) that becomes a bit of a nightmare. Trying to teach the A.I. new words is something of a task. I wish there was an interface in which I could train the A.I. so it could serve for Hinglish words also. I do think it does, but it's too rudimentary as it is to be of any use, at least where it is now.



Several issues were brought before the Debian Community team regarding responsiveness, tone, and needed software updates to

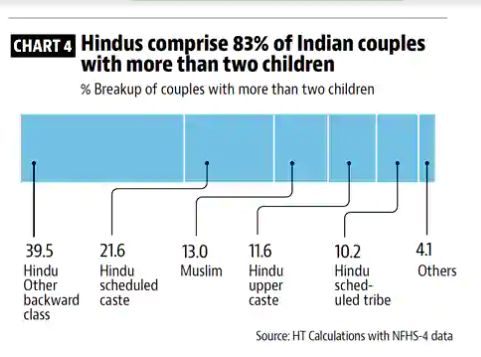

Hindus comprise 83% of Indian couples with more than two children

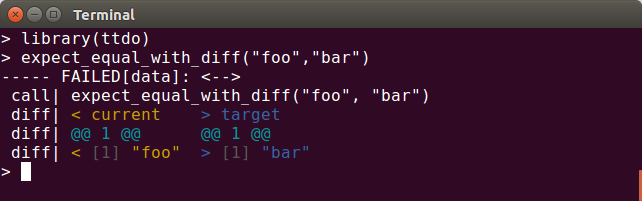

A new (and genuinely) minor release of our

This release cleans up one microscopic wart of an R warning when installing and byte-compiling the package due to a

We all know these situations when we receive an email asking "Can you check the design of X, I need a reply by tonight." Or an instant message: "My website went down, can you check?" Another email: "I canceled a plan at the hosting company, can you restore my website as fast as possible?" A phone call: "The TLS certificate didn't get updated, and now we can't access service Y." Yet another email: "Our super important medical advice website is suddenly being censored in country Z, can you help?"

Everyone knows those messages that have URGENT in capital letters in the email subject. It might be that some of them really are urgent. Others are the written signs of someone having a hard time properly planning their own work and passing their delays on to someone who comes later in the creation or production chain. And others again come from people who are overworked and try to delegate some of their tasks to a friendly soul who is likely to help.


Last Christmas, to make room for a tree, I dis-assembled my


TL;DR: The Zoom meeting link you have probably looks like this:


It finally happened

On the 6th of April 2017, I finally took the plunge and


As a cat owner, being surprised by cat intelligence delights me. They're not exactly smart like a human, but they are smart in cattish ways. The more I watch them and try to sort out what they're thinking, the more it pleases me to discover they can solve problems and adapt in recognizably intelligent ways, sometimes unique to each individual cat. Each time that happens, it evokes in me affectionate wonder.

Today, I had one of those joyful moments.

First, you need to understand that some months ago, I thought I had my male cat all figured out with respect to mealtimes. I had been cleaning up after my oafish boy who made a watery mess on the floor from his mother's bowl each morning. I was slightly annoyed, but was mostly curious, and had a hunch. A quick search of the web confirmed it: my cat was left-handed. Not only that, but I learned this is typical for males, whereas females tend to be right-handed. Right away, I knew what I had to do: I adjusted the position of their water bowls relative to their food, swapping them from right to left; the messy morning feedings ceased. I congratulated myself for my cleverness.

You see, after the swap, as he hooked the kibbles with his left paw out of the right-hand bowl, they would land immediately on the floor where he could give them chase. The swap caused the messes to cease because before, his left-handed scoops would land the kibbles in the water to the right; he would then have to scoop the kibble out onto the floor, sprinkling water everywhere! Furthermore, the sodden kibble tended to not skitter so much, decreasing his fun. Or so I thought. Clearly, I reasoned, having sated himself on the entire contents of his own bowl, he turned to pilfering his mother's leftovers for some exciting kittenish play. I had evidence to back it up, too: he and his mother both seem to enjoy this game, a regular fixture of their mealtime routines. She, too, is adept at hooking out the kibbles, though mysteriously, without making a mess in her water, whichever way the bowls are oriented. I chalked this up to his general clumsiness of movement vs. her daintiness and precision, something I had observed many times before.

Come to think of it, lately, I've been seeing more mess around his mother's bowl again. Hmm. I don't know why I didn't stop to consider why...

And then my cat surprised me again.

This morning, with Shadow behind my back as I sat at my computer, finishing up his morning meal at his mother's bowl, I thought I heard something odd. Or rather, I didn't hear something. The familiar skitter-skitter sound of kibbles evading capture was missing. So I turned and looked. My dear, devious boy had squished his overgrown body behind his mother's bowls, nudging them ever so slightly askew to fit the small space. Now the bowl orientation was swapped back again. Stunned, I watched him carefully flip out a kibble with his left paw. Plop! Into the water on the right. Concentrating, he fished for it. A miss! He casually licked the water from his paw. Another try. Swoop! Plop, onto the floor. No chase now, just satisfied munching of his somewhat mushy kibble. And then it dawned on me that I had got it somewhat wrong. Yes, he enjoyed Chase the Kibble, like his mom, but I never recognized he had been indulging in a favourite pastime, peculiarly his own.

I had judged his mealtime messes as accidents, a very human way of thinking about my problem. Little did I know, it was deliberate! His private game was Bobbing for Kibbles. I don't know if it's the altered texture, or dabbling in the bowl, but whatever the reason, due to my meddling, he had been deprived of this pleasure. No worries, a thwarted cat will find a way. And that is the joy of cat intelligence.
